Claude Cowork: What an Autonomous ‘Digital Coworker’ Means for Enterprise AI Governance, Security, and Trust

How to govern an autonomous digital coworker like Claude Cowork with structured data, access controls, audit logs, and trust metrics for secure enterprise use.

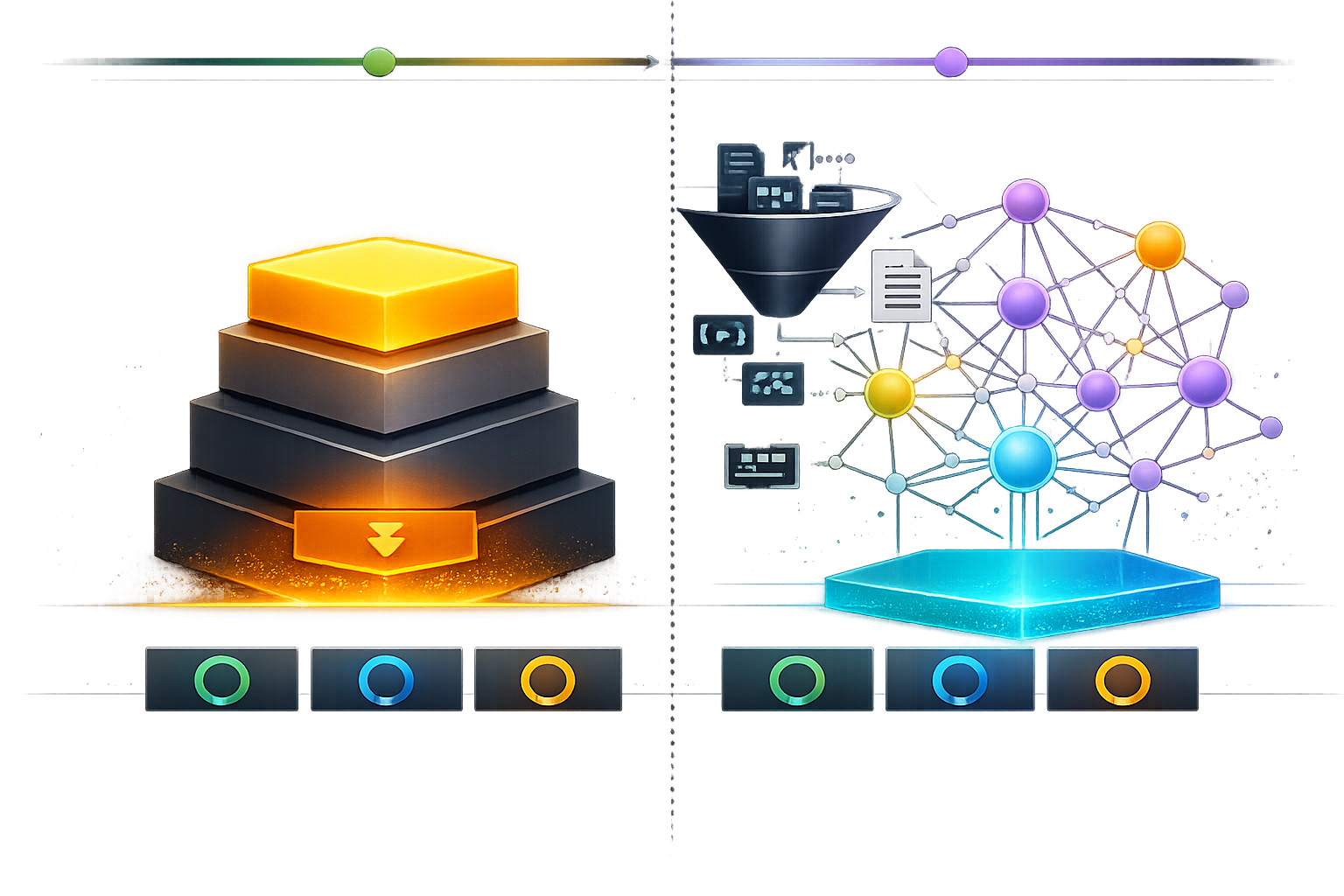

Claude Cowork reframes an AI assistant from “chat that suggests” to an autonomous digital coworker that can plan, execute multi-step work, and interact with files and tools with limited supervision. That shift is valuable for productivity—but it also changes your enterprise risk model. The core question becomes: how do you make an agentic system governable, secure, and trustworthy when it can take actions, touch data, and trigger downstream effects?

This article provides a practical blueprint: define scope, build a structured-data governance control plane the model can’t “reason around,” secure tool use with least privilege, and prove trust with auditability and continuous evaluation.

Key takeaways

Treat an autonomous digital coworker as a new identity with explicit scope, permissions boundaries, and escalation rules—not as a “smart UI.”

Build a governance control plane on structured data (policy schemas, identity attributes, asset inventories) so enforcement is deterministic and auditable.

Secure tool use with per-task short-lived credentials, a tool gateway, and schema validation to reduce blast radius and injection risk.

Make trust measurable: log every decision and tool call, require provenance, and track KPIs like policy violations and unsupported-claim rate.

If you’re building this in production, align this guide with your structured-data foundation and security hardening patterns:

• Structured Data for LLMs (pillar): schemas, validation, and governance patterns

• LLM Security & Prompt Injection Mitigation (pillar): tool-use hardening and safe orchestration

Prerequisites: Define the “digital coworker” scope and the structured data it can touch

Before you debate controls, decide what “Cowork” means inside your organization. An autonomous coworker is not just generating text—it is making decisions, selecting tools, and performing actions. Governance starts with scoping: what tasks it can do, what systems it can access, and what data classes it can read or write.

Clarify autonomy level: assistive vs. agentic execution

Define the autonomy tier you will allow:

- Assistive: drafts, summarizes, and recommends actions, but a human executes in tools.

- Agentic: the coworker executes tool calls (create tickets, update records, run queries) within defined boundaries.

- Autonomous workflows: the coworker chains tasks end-to-end, only escalating on exceptions or high-risk actions.

If the coworker can change state (deploy, delete, send externally, export data), you need enforceable policy gates and approvals. If it only recommends, your primary risks shift toward data leakage and unsupported claims.

Inventory systems, data classes, and allowed actions

Write a one-page “Coworker Charter” that states: (1) supported tasks, (2) systems it can touch, (3) permissions boundaries, and (4) escalation rules. Then map your data classification (public/internal/confidential/restricted) to allowed model actions. Example: confidential data may be readable for summarization but not exportable; restricted data may require explicit approval and be limited to retrieval-only with redaction.
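One way to make the charter's data-class mapping enforceable is a small lookup table that code can check. The sketch below follows the example in the paragraph above for confidential and restricted data; the entries for public and internal data, the action names, and the `check_action` helper are assumptions for illustration only.

```python
# Hypothetical mapping from data class to the actions the coworker may take.
# The "confidential" and "restricted" rows follow the charter example;
# the "public" and "internal" rows are assumed for illustration.
ALLOWED_ACTIONS = {
    "public": {"read", "summarize", "export"},
    "internal": {"read", "summarize"},
    "confidential": {"read", "summarize"},   # readable, but not exportable
    "restricted": {"retrieve_redacted"},     # retrieval-only, with redaction
}
APPROVAL_REQUIRED = {"restricted"}           # explicit approval on every use


def check_action(data_class: str, action: str) -> str:
    """Return 'allow', 'needs_approval', or 'deny' for an action on a data class."""
    allowed = ALLOWED_ACTIONS.get(data_class, set())  # unknown classes get nothing
    if action not in allowed:
        return "deny"
    if data_class in APPROVAL_REQUIRED:
        return "needs_approval"
    return "allow"


print(check_action("confidential", "summarize"))
print(check_action("confidential", "export"))
print(check_action("restricted", "retrieve_redacted"))
```

Keeping the table in data rather than in a prompt means a change to the charter is a reviewed change to one structure, not a rewording exercise.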

Establish baselines first: quantify current AI tool sprawl and exposure risk, for example the percentage of apps containing sensitive data, the number of systems the coworker could access, and AI-related incidents or tickets per quarter. These become your “before” metrics when you introduce policy enforcement.

Choose your structured data sources of truth (catalog, CMDB, IAM, policy store)

Autonomous coworkers need a structured data backbone they can reference and your systems can enforce:

- Identity: users, roles, service accounts, device posture, and session context (IAM/IdP).

- Assets: apps, endpoints, datasets, owners, and environments (CMDB/data catalog).

- Policies: machine-enforceable rules and approvals (policy store).

- Events: audit logs, tool calls, and policy decisions (SIEM/log pipeline).

Step-by-step: Build a governance control plane using structured data (policies the model can’t “reason around”)

The most common governance failure is relying on “prompt rules” (“don’t do X”) instead of enforceable controls. A governance control plane uses structured policies that are evaluated outside the model and applied consistently across tool calls.

Model an “AI policy schema” (who/what/when/why) in structured form

Create a machine-enforceable policy schema (JSON or SQL tables) that captures: actor (user + coworker identity), intent, action, resource, data class, environment, and required approvals. The key is that the policy engine evaluates this schema—so the coworker can’t bypass it with reasoning or rephrasing.
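A minimal sketch of such a schema, assuming Python dataclasses stand in for the JSON or SQL representation. The rule contents, role names, and the `evaluate` function are hypothetical; a production deployment would more likely use a dedicated policy engine. Note that `intent` is recorded on the request for audit purposes but is deliberately not used for matching here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    actor_role: str
    action: str
    resource: str
    data_class: str
    environment: str
    requires_approval: bool = False


@dataclass(frozen=True)
class Request:
    actor_role: str
    intent: str        # stored for audit; not part of the match in this sketch
    action: str
    resource: str
    data_class: str
    environment: str


# Hypothetical rules for illustration.
RULES = [
    PolicyRule("support_agent", "read", "tickets", "internal", "prod"),
    PolicyRule("support_agent", "update", "tickets", "internal", "prod",
               requires_approval=True),
]


def evaluate(req: Request, rules=RULES) -> str:
    """Deny by default; allow only when a rule matches every structured field."""
    key = (req.actor_role, req.action, req.resource, req.data_class, req.environment)
    for rule in rules:
        rule_key = (rule.actor_role, rule.action, rule.resource,
                    rule.data_class, rule.environment)
        if rule_key == key:
            return "needs_approval" if rule.requires_approval else "allow"
    return "deny"


req = Request(actor_role="support_agent", intent="triage backlog", action="delete",
              resource="tickets", data_class="internal", environment="prod")
print(evaluate(req))
```

Because the decision depends only on structured fields, rephrasing the intent has no effect on the outcome.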

Bind policies to identities, resources, and actions (RBAC/ABAC)

Use RBAC for coarse roles and ABAC for context. With ABAC, the coworker’s permissions are computed from structured attributes (department, role, data sensitivity, device posture, environment). This reduces one-off exceptions and makes access decisions explainable.
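To illustrate how an ABAC decision can be both computed and explained, here is a small sketch. The attribute names (`department`, `max_data_class`, `device_compliant`) and the three checks are assumptions chosen for the example, not a prescribed attribute model.

```python
# Ordering of data classes from least to most sensitive.
CLASS_RANK = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


def abac_decision(attrs: dict, resource: dict) -> tuple:
    """Return (allowed, reasons). An empty reasons list means every check passed."""
    reasons = []
    if attrs["department"] != resource["owner_department"]:
        reasons.append("department mismatch")
    if CLASS_RANK[resource["data_class"]] > CLASS_RANK[attrs["max_data_class"]]:
        reasons.append("data class above clearance")
    if resource["environment"] == "prod" and not attrs["device_compliant"]:
        reasons.append("non-compliant device for prod")
    return (not reasons, reasons)


attrs = {"department": "finance", "max_data_class": "confidential",
         "device_compliant": False}
resource = {"owner_department": "finance", "data_class": "restricted",
            "environment": "prod"}
print(abac_decision(attrs, resource))
```

Returning the list of failed checks alongside the decision is what makes the denial explainable to the requester and to an auditor.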

Add approval workflows for high-risk actions (human-in-the-loop gates)

Implement “deny by default” with allowlists for tools/actions. Require approvals for state-changing or high-impact actions: deploy, delete, send externally, change permissions, export data, or run expensive queries. Approvals should be tied to specific action parameters (resource, scope, time window), not a generic “yes.”
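One possible way to tie an approval to specific parameters is to fingerprint the action and its arguments, then check that fingerprint and an expiry at execution time. The function names and the approval record layout below are hypothetical.

```python
import hashlib
import json
import time


def fingerprint(action: str, params: dict) -> str:
    """Hash the action and its parameters in a canonical (key-sorted) form."""
    canonical = json.dumps({"action": action, "params": params}, sort_keys=True)
    return hashlib.sha256(canonical.encode()).hexdigest()


def grant_approval(action, params, approver, ttl_seconds, now=None):
    """Record an approval that covers exactly these parameters for a time window."""
    now = time.time() if now is None else now
    return {
        "fingerprint": fingerprint(action, params),
        "approver": approver,
        "expires_at": now + ttl_seconds,
    }


def approval_covers(approval, action, params, now=None) -> bool:
    """True only if the parameters are unchanged and the window is still open."""
    now = time.time() if now is None else now
    return (now <= approval["expires_at"]
            and approval["fingerprint"] == fingerprint(action, params))


approval = grant_approval("export", {"dataset": "X", "rows": 100}, "alice", 600, now=1000.0)
print(approval_covers(approval, "export", {"dataset": "X", "rows": 100}, now=1300.0))
print(approval_covers(approval, "export", {"dataset": "X", "rows": 5000}, now=1300.0))
```

If the coworker changes the scope after approval (here, the row count), the fingerprint no longer matches and the action falls back to needing a fresh approval.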

Track: (1) % of coworker actions mapped to a policy rule, (2) % requiring approval, and (3) reduction in policy exceptions over time. These are leading indicators of whether governance is real or performative.

Step-by-step: Secure tool use and data access (least privilege + verifiable boundaries)

Tool use is where agentic systems become operationally risky. Security controls must be verifiable (enforced by gateways, tokens, and schemas), not dependent on the model “behaving.”

Issue short-lived credentials and scoped tokens per task

Use per-task, time-bound credentials (OAuth scopes, STS tokens) so the coworker can’t retain broad access beyond the job. Bind tokens to the policy decision: if the policy allows “read-only for dataset X for 10 minutes,” the token should encode exactly that.
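The idea of a token that "encodes exactly" the policy decision can be sketched with the standard library alone. This is an illustration of the principle, not a replacement for OAuth or STS: the token format, the `SECRET` value, and both function names are assumptions, and a real signing key would come from a secrets manager.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"demo-only-secret"  # assumption: placeholder key for the example


def mint_token(scope: str, resource: str, ttl_seconds: int,
               now: Optional[float] = None) -> str:
    """Mint a signed token carrying the scope, resource, and expiry."""
    now = time.time() if now is None else now
    claims = {"scope": scope, "resource": resource, "exp": now + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    sig = hmac.new(SECRET, body, hashlib.sha256).hexdigest()
    return body.decode() + "." + sig


def verify_token(token: str, scope: str, resource: str,
                 now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token for this exact scope and resource."""
    now = time.time() if now is None else now
    body, _, sig = token.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body))
    return (claims["scope"] == scope
            and claims["resource"] == resource
            and now < claims["exp"])


# "Read-only for dataset X for 10 minutes"
token = mint_token("read", "dataset-X", ttl_seconds=600, now=1000.0)
print(verify_token(token, "read", "dataset-X", now=1300.0))
print(verify_token(token, "write", "dataset-X", now=1300.0))
```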

Enforce network/data egress controls and DLP rules

Route all tool calls through a gateway that enforces structured policies: allowed endpoints, methods, payload constraints, rate limits, and egress destinations. Apply DLP scanning and redaction at the gateway so sensitive content can’t be sent to unapproved channels.
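As a rough sketch of what the gateway checks might look like, the function below applies a host allowlist, a method allowlist, and regex-based redaction. The hostname and both patterns are illustrative assumptions; real DLP products use far richer detection than two regular expressions.

```python
import re
from urllib.parse import urlparse

ALLOWED_HOSTS = {"api.internal.example.com"}   # hypothetical approved destination
ALLOWED_METHODS = {"GET", "POST"}
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),      # US SSN-like number
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),       # AWS access key ID shape
]


def gateway_check(method: str, url: str, payload: str):
    """Return (allowed, reason, payload), with the payload redacted when allowed."""
    host = urlparse(url).hostname
    if host not in ALLOWED_HOSTS:
        return False, "egress host not allowlisted", payload
    if method.upper() not in ALLOWED_METHODS:
        return False, "method not allowed", payload
    redacted = payload
    for pattern in SENSITIVE_PATTERNS:
        redacted = pattern.sub("[REDACTED]", redacted)
    return True, "ok", redacted


print(gateway_check("POST", "https://api.internal.example.com/notes", "SSN 123-45-6789"))
print(gateway_check("POST", "https://files.example.net/upload", "quarterly report"))
```

Because every tool call passes through one function, blocked destinations and redactions can be counted and logged in one place.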

Add prompt/tool injection defenses via structured allowlists

Harden against injection by separating instructions from data, validating tool arguments against strict schemas, and rejecting unrecognized tools/parameters. Treat retrieved content (emails, docs, web pages) as untrusted input that can attempt to override the coworker’s instructions.
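Strict argument validation can be sketched as follows. The `create_ticket` tool, its fields, and the schema format are hypothetical; in practice a JSON Schema validator with unknown properties disallowed would serve the same purpose.

```python
# Hypothetical tool schema: every field is required, and nothing else is accepted.
TOOL_SCHEMAS = {
    "create_ticket": {
        "title": {"type": str, "max_len": 120},
        "priority": {"type": int, "min": 1, "max": 4},
    }
}


def validate_tool_call(tool: str, args: dict) -> list:
    """Return a list of validation errors; an empty list means the call is acceptable."""
    schema = TOOL_SCHEMAS.get(tool)
    if schema is None:
        return [f"unknown tool: {tool}"]
    errors = [f"unknown field: {name}" for name in args if name not in schema]
    for name, spec in schema.items():
        if name not in args:
            errors.append(f"missing field: {name}")
            continue
        value = args[name]
        if type(value) is not spec["type"]:   # strict check: bool is not accepted as int
            errors.append(f"wrong type for {name}")
            continue
        if "max_len" in spec and len(value) > spec["max_len"]:
            errors.append(f"{name} too long")
        if "min" in spec and value < spec["min"]:
            errors.append(f"{name} below minimum")
        if "max" in spec and value > spec["max"]:
            errors.append(f"{name} above maximum")
    return errors


print(validate_tool_call("create_ticket", {"title": "Reset VPN", "priority": 2}))
print(validate_tool_call("create_ticket",
                         {"title": "Reset VPN", "priority": 2, "run_as": "admin"}))
```

Rejecting the extra `run_as` field is the point: a parameter smuggled in by injected content never reaches the tool.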

Least-privilege controls for an autonomous coworker

| Control | What it prevents | Implementation hint |
|---|---|---|
| Short-lived scoped tokens | Standing access and credential reuse | Mint per task; bind to policy decision and TTL |
| Tool gateway | Direct API calls that bypass policy/DLP | Centralize allowlists, rate limits, and payload validation |
| Schema-validated tool arguments | Tool injection and parameter smuggling | Reject unknown fields; enforce types and ranges |
| Egress + DLP enforcement | Sensitive data exfiltration | Block unapproved domains; redact secrets before send |

Measure: % of tool calls using short-lived tokens, number of blocked egress/DLP events, and mean time to revoke access after policy changes. These metrics help you prove least privilege is actually working.

Step-by-step: Make the coworker auditable and trustworthy (logging, provenance, and evaluation)

Trust in an autonomous coworker is not a vibe—it’s evidence. Your goal is to reconstruct what happened (and why) for any output or action, and to continuously evaluate whether the coworker is improving or drifting.

Log every decision and tool call with structured event schemas

Adopt a structured audit event schema capturing: request ID, user, coworker identity, model version, retrieved sources, tool calls, policy checks, approvals, outputs, and redactions. Logging only prompts is insufficient—actions and policy decisions are what matter for investigations.
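A minimal sketch of such an event, using the fields listed above. The field types, the nested dictionary shapes, and the sample values (including the model version string) are assumptions; adapt them to whatever your SIEM or log pipeline expects.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AuditEvent:
    request_id: str
    user: str
    coworker_identity: str
    model_version: str
    retrieved_sources: list = field(default_factory=list)
    tool_calls: list = field(default_factory=list)
    policy_checks: list = field(default_factory=list)
    approvals: list = field(default_factory=list)
    output: str = ""
    redactions: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize with sorted keys so log lines are stable and easy to diff."""
        return json.dumps(asdict(self), sort_keys=True)


# All values below are made up for the example.
event = AuditEvent(
    request_id="req-001",
    user="alice",
    coworker_identity="coworker-support-01",
    model_version="example-model-v1",
    tool_calls=[{"tool": "create_ticket", "args": {"priority": 2}}],
    policy_checks=[{"rule": "support-read-tickets", "decision": "allow"}],
)
print(event.to_json())
```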

Add provenance: citations, data lineage, and “why” fields

Require provenance fields in outputs (source IDs, timestamps, and uncertainty notes) to support internal review and compliance. For tool actions, store the “why” as a structured rationale: the intent, the policy rule matched, and the approval (if any).
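A provenance requirement is easiest to enforce when it is checked mechanically before an output is released. The sketch below assumes outputs and tool-action rationales are dictionaries; the key names are hypothetical. The approval is treated as optional, matching the "if any" above.

```python
REQUIRED_PROVENANCE = ("source_ids", "retrieved_at", "uncertainty_note")
REQUIRED_RATIONALE = ("intent", "policy_rule")   # "approval" is optional


def missing_fields(record: dict, required: tuple) -> list:
    """Return the required keys that are absent or empty in the record."""
    return [key for key in required if not record.get(key)]


output = {
    "text": "Refund policy allows returns within 30 days.",
    "provenance": {"source_ids": ["kb-112"], "retrieved_at": "2025-01-15T10:00:00Z"},
}
rationale = {"intent": "answer customer question", "policy_rule": "support-read-kb"}

print(missing_fields(output.get("provenance", {}), REQUIRED_PROVENANCE))
print(missing_fields(rationale, REQUIRED_RATIONALE))
```

An output with a non-empty missing list can be held for review rather than sent, which turns the provenance requirement into a gate instead of a guideline.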

Establish trust metrics and continuous evaluation

Define trust KPIs: task success rate, policy violation rate, hallucination/unsupported-claim rate, and human override frequency. Review weekly with owners (Security, IT, and the business function) and feed failures back into policy, retrieval sources, and tool schemas.
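These KPIs can be computed directly from structured task records. The sketch below assumes one record per completed task with boolean flags; the flag names are hypothetical and would map onto whatever your audit events actually store.

```python
def trust_kpis(tasks: list) -> dict:
    """Compute each KPI as the fraction of tasks where the flag is set."""
    total = len(tasks)
    if total == 0:
        return {}

    def rate(flag: str) -> float:
        return sum(1 for task in tasks if task.get(flag)) / total

    return {
        "task_success_rate": rate("succeeded"),
        "policy_violation_rate": rate("policy_violation"),
        "unsupported_claim_rate": rate("unsupported_claim"),
        "human_override_rate": rate("human_override"),
    }


tasks = [
    {"succeeded": True},
    {"succeeded": True, "human_override": True},
    {"succeeded": True, "unsupported_claim": True},
    {"succeeded": False, "policy_violation": True},
]
print(trust_kpis(tasks))
```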

Dashboard idea

Create a dashboard with trend lines for policy violations, unsupported claims, and approval latency. Correlate these with incident rates and business outcomes (time saved, ticket deflection). This turns “trust” into an operational SLO.

Common mistakes and troubleshooting: Fix governance gaps before they become incidents

Mistake patterns (over-permissioning, silent tool sprawl, missing logs)

- Granting broad API keys or long-lived tokens “to get it working,” then never tightening them.

- Skipping structured policy binding and relying on prompts or informal guidance.

- Allowing direct tool access without a gateway (no centralized enforcement, no consistent DLP).

- Logging only prompts/outputs, not tool calls, policy checks, approvals, and redactions.

Troubleshooting playbook (when the coworker is blocked, wrong, or risky)

- Blocked action: check policy match first (actor, resource, action, environment), then token scope/TTL, then gateway allowlist/rate limit.

- Wrong output: inspect retrieval sources and freshness; verify citations/provenance; add tests for known failure cases.

- Risky behavior: review tool argument validation failures, injection indicators in retrieved content, and any DLP/egress blocks.

- Repeated exceptions: identify the top failure modes from telemetry (policy mismatch vs token scope vs schema errors) and prioritize fixes by frequency and impact.
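The blocked-action check order and the telemetry ranking from the playbook can be sketched as two small helpers. The context keys and cause labels are assumptions for illustration; this version ranks by frequency only and leaves impact weighting to the reviewer.

```python
from collections import Counter


def diagnose_block(ctx: dict) -> str:
    """Check likely causes in playbook order: policy, then token, then gateway."""
    if not ctx["policy_matched"]:
        return "policy_mismatch"
    if ctx["token_expired"] or not ctx["token_scope_ok"]:
        return "token_scope"
    if not ctx["gateway_allowlisted"] or ctx["rate_limited"]:
        return "gateway"
    return "unknown"   # nothing found at these three layers; look downstream


def top_failure_modes(causes: list, n: int = 3) -> list:
    """Rank diagnosed causes by how often they occur."""
    return Counter(causes).most_common(n)


ctx = {"policy_matched": True, "token_expired": True, "token_scope_ok": True,
       "gateway_allowlisted": True, "rate_limited": False}
print(diagnose_block(ctx))
print(top_failure_modes(["token_scope", "policy_mismatch", "token_scope", "gateway"]))
```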

Review checkpoints

Add quarterly governance reviews with Security, Legal, and Data owners. Document every exception with an expiry date and compensating controls (e.g., tighter scopes, additional approvals, or extra monitoring). For executive reporting, translate controls into outcomes: fewer incidents, faster approvals, and measurable time saved.

A digital coworker is only as trustworthy as the policies, identity controls, and audit evidence around it. If you can’t explain an action, you can’t govern it.

Anthropic’s Claude models emphasize safety and are increasingly used in enterprise contexts, while features like Cowork push toward more autonomous execution. As autonomy increases, governance must shift from “guidance” to “enforcement + evidence.”

Founder of Geol.ai
