Google Core Web Vitals Ranking Factors 2025: What’s Changed and What It Means for Knowledge Graph-Ready Content

2025 news analysis of Google Core Web Vitals as ranking factors: what changed, what matters now, and how speed supports structured data for LLMs.

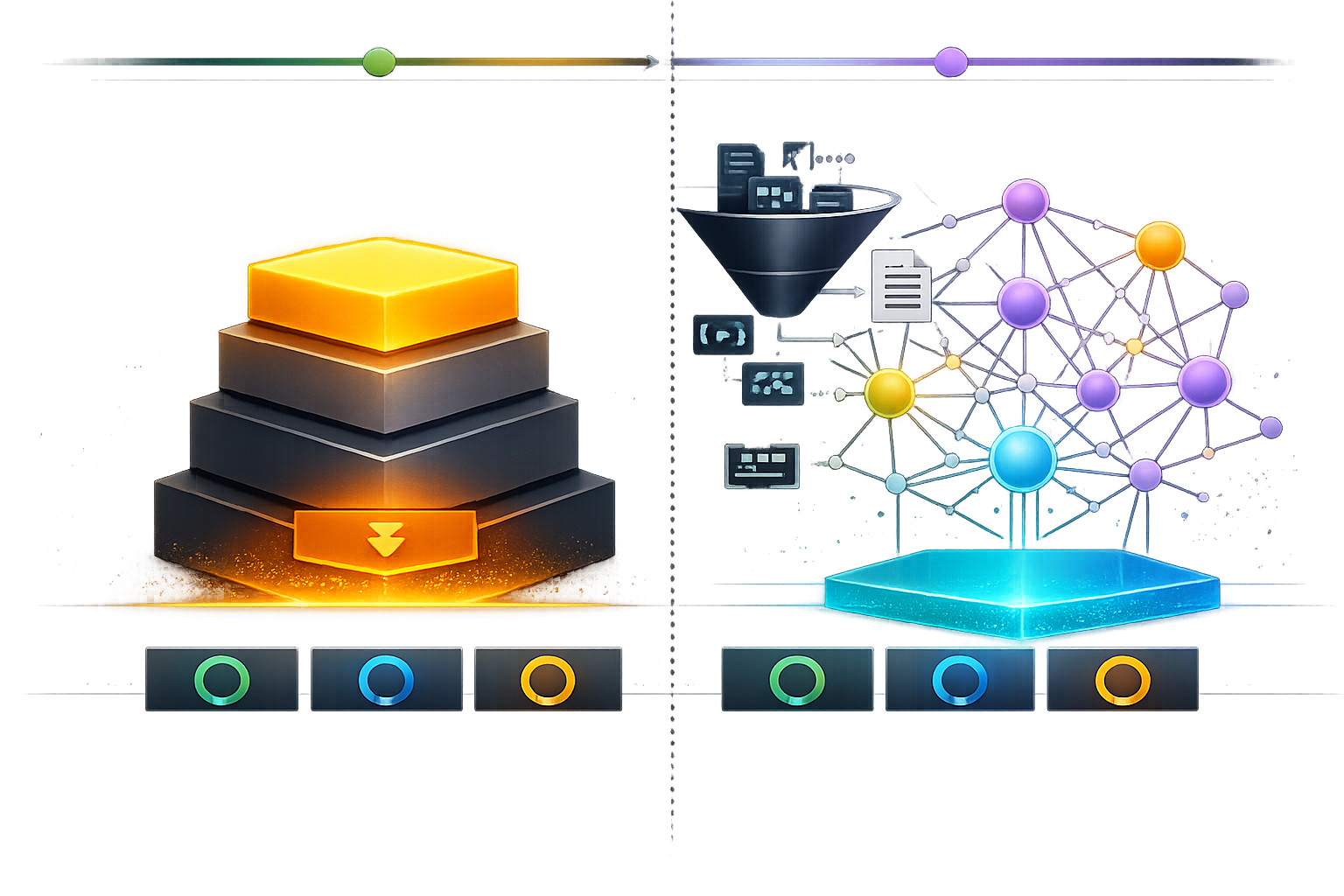

In 2025, Core Web Vitals (CWV) still matter for SEO, but mostly as a page experience differentiator rather than a “magic lever” that overrides relevance. The practical shift is that performance increasingly functions as an enabling layer: it reduces friction for crawling, rendering, and user satisfaction, and that reliability is especially important for content designed to be extracted, summarized, and cited by AI systems. If your goal is Knowledge Graph-ready content (clear entities, typed relationships, and structured data), good CWV helps those signals arrive quickly and consistently enough to be processed.

Treat Core Web Vitals as table stakes for competitive SERPs: they rarely beat stronger topical relevance, but they can reorder similarly relevant pages and improve reliability for rendering-dependent signals (including JSON-LD and on-page entity cues).

The 2025 news hook: Core Web Vitals still matter, but the weighting story is evolving

Timeline: from INP replacing FID to 2025’s “page experience” recalibration

The biggest recent shift was Google’s replacement of First Input Delay (FID) with Interaction to Next Paint (INP) as a Core Web Vital in March 2024. FID measured only the input delay of the first interaction; INP observes clicks, taps, and key presses throughout the visit and reports a near-worst interaction latency, so it reflects responsiveness across the whole page lifecycle. In 2025, Google’s page experience signals remain in play, but their impact is most visible in tight, high-competition SERPs where multiple pages are similarly relevant and credible.
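To make “responsiveness across the page lifecycle” concrete: INP records the latency of each interaction in a visit and reports one of the slowest. On pages with many interactions, web.dev describes ignoring one of the highest latencies for every 50 interactions, so a single hiccup does not define the score. The sketch below applies that rule, assuming you already collect per-interaction latencies in milliseconds; `estimate_inp` is a hypothetical helper, not part of any library.

```python
from typing import List, Optional


def estimate_inp(latencies_ms: List[float]) -> Optional[float]:
    """Approximate one visit's INP from its per-interaction latencies (ms)."""
    if not latencies_ms:
        return None  # no interactions, so no INP value for this visit
    worst_first = sorted(latencies_ms, reverse=True)
    # Ignore one of the highest latencies for every 50 interactions,
    # without ever indexing past the end of the list.
    index = min(len(worst_first) - 1, len(worst_first) // 50)
    return worst_first[index]


# A short visit: the single worst interaction is reported.
print(estimate_inp([40, 120, 80]))  # 120
```

For visits with fewer than 50 interactions this is simply the worst latency, which is why a single slow widget can fail INP on its own.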

The real question for 2025: ranking factor vs. retrieval and satisfaction signal

When teams ask “Are CWV a ranking factor?” they usually mean “Will improving CWV move my rankings?” In 2025, a more useful question is: does performance improve retrieval, rendering, and satisfaction enough to increase visibility and citations—especially in AI-driven discovery? That’s where CWV shines: faster pages tend to be easier to render, less error-prone, and more pleasant to use, which can influence engagement and reduce pogo-sticking. This aligns with the broader shift toward AI-mediated search experiences described in analyses of AI search engines and changing SEO practices (external: nogood.io).

Core Web Vitals adoption snapshot (illustrative baseline to track in 2025)

Build this snapshot from your own CrUX or field data, segmented by device, and track pass rates over time. Public datasets such as the Chrome UX Report (CrUX) are the usual starting point for CWV benchmarking.
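Google assesses each metric at the 75th percentile (p75) of page loads, separately for mobile and desktop, and the Core Web Vitals assessment passes only when LCP, INP, and CLS are all in the “good” range. A minimal pass-rate sketch, assuming you have exported one row per URL and device with p75 values (the row layout and function names are assumptions, not a CrUX export format):

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Upper bounds of the "good" range from Google's published thresholds.
GOOD_LCP_MS = 2500
GOOD_INP_MS = 200
GOOD_CLS = 0.1

# (url, device, p75 LCP in ms, p75 INP in ms, p75 CLS)
Row = Tuple[str, str, float, float, float]


def passes_cwv(lcp_ms: float, inp_ms: float, cls: float) -> bool:
    """True only if all three p75 values are in the 'good' range."""
    return lcp_ms <= GOOD_LCP_MS and inp_ms <= GOOD_INP_MS and cls <= GOOD_CLS


def pass_rate_by_device(rows: Iterable[Row]) -> Dict[str, float]:
    """Share of URLs passing all three metrics, computed per device."""
    totals: Dict[str, int] = defaultdict(int)
    passing: Dict[str, int] = defaultdict(int)
    for _url, device, lcp, inp, cls in rows:
        totals[device] += 1
        if passes_cwv(lcp, inp, cls):
            passing[device] += 1
    return {device: passing[device] / totals[device] for device in totals}
```

Running this on each monthly export gives the pass-rate trend line per device.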

One caution: some “ranking factor studies” claim large numeric weights for page experience in 2025. Treat those as directional rather than definitive, and validate against your own query sets and templates (external: dollarpocket.com).

How CWV functions as a ranking factor in 2025 (and where it doesn’t)

CWV as a “competitive differentiator”: when performance breaks ties

In practice, CWV tends to matter most when Google has multiple pages that satisfy intent similarly well. If two pages cover the same entities, provide comparable evidence, and match the query equally, the page with better LCP/INP/CLS may be favored—especially on mobile, where resource constraints amplify UX differences.

CWV vs. relevance signals: why topical authority and entities still win

CWV is not a substitute for relevance, expertise, or entity coverage. If your content fails to answer the query or lacks clear entity definitions and relationships, a fast page won’t save it. This is increasingly important as AI systems synthesize answers from sources they deem reliable; broader AI search comparisons highlight that retrieval quality and source trust are central (external: Forbes).

The hidden impact: crawl efficiency, rendering, and indexation reliability

Beyond ranking, CWV improvements can reduce operational SEO risk: fewer rendering delays, fewer late-inserted modules that move content around while the page is being parsed, and fewer long tasks that block interactivity. That matters for modern pages built on JavaScript frameworks and dynamic modules, where Googlebot and other retrieval systems must render, parse, and extract meaning. If your structured data appears late (or inconsistently), you can lose rich result eligibility or reduce downstream entity extraction confidence.

Mini-study template: CWV medians by ranking bucket (use your own data)

Illustrative example for a small query sample. Replace with measured field data (CrUX/RUM). Method note: correlation ≠ causation; control for intent, brand, and backlink differences.
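A sketch of the aggregation behind this mini-study, assuming you have joined each ranking URL’s position with one field metric (for example, p75 LCP on mobile). The bucket boundaries and names are hypothetical; the same correlation caveat applies to whatever it outputs.

```python
from statistics import median
from typing import Dict, List, Tuple

# Hypothetical position buckets; align them with how you report rankings.
BUCKETS = [("1-3", 1, 3), ("4-10", 4, 10), ("11-20", 11, 20)]


def medians_by_bucket(rows: List[Tuple[int, float]]) -> Dict[str, float]:
    """Median metric value per ranking bucket.

    rows: (ranking position, field metric value) for ONE metric and device.
    Positions outside every bucket are ignored, and empty buckets are omitted.
    """
    grouped: Dict[str, List[float]] = {label: [] for label, _, _ in BUCKETS}
    for position, value in rows:
        for label, low, high in BUCKETS:
            if low <= position <= high:
                grouped[label].append(value)
                break
    return {label: median(values) for label, values in grouped.items() if values}
```

Medians are used rather than means so one pathological URL does not skew a bucket.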

For teams building AI-citation-ready pages, it helps to connect this analysis to broader AI visibility monitoring and integration patterns. Protocols that standardize how AI systems connect to tools and data sources are also evolving (see our related briefing, “Anthropic’s Model Context Protocol (MCP) Gains Industry Adoption”).

The 2025 CWV metrics that move rankings: LCP, INP, CLS (and what Google actually measures)

Google’s Core Web Vitals are still centered on three outcomes: perceived loading speed (LCP), responsiveness (INP), and visual stability (CLS). The thresholds below are widely cited in Google’s documentation and industry tooling; use them as your baseline for “good” performance targets (external: web.dev).

| Metric | Good | Needs improvement | Poor | Common template causes (top 2–3) |
|---|---|---|---|---|
| LCP | ≤ 2.5s | 2.5s–4.0s | > 4.0s | Slow TTFB/server latency; unoptimized hero images; render-blocking CSS/fonts. |
| INP | ≤ 200ms | 200ms–500ms | > 500ms | Long main-thread tasks; heavy JS bundles; third-party scripts (ads/analytics/widgets). |
| CLS | ≤ 0.1 | 0.1–0.25 | > 0.25 | Missing media dimensions; late-loading ads/embeds; font swaps without proper fallback. |
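When scripting reports, the table above translates directly into a small classifier. A minimal sketch, assuming values are already p75 figures with LCP and INP in milliseconds; the boundary handling (“good” includes its upper bound, “poor” starts strictly above the second bound) follows the table, and the names are illustrative.

```python
from typing import Dict, Tuple

# (upper bound of "good", upper bound of "needs improvement") per metric.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a p75 value as 'good', 'needs improvement', or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"
```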

LCP in 2025: what “good” looks like for content-heavy pages

For editorial and B2B content templates, LCP is often dominated by the hero image, featured media, or a large above-the-fold text container. Prioritize faster server response, image compression and sizing (including modern formats), and eliminating render-blocking resources. If your LCP element is unpredictable across templates, you’ll struggle to stabilize performance at scale.
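One way to decide which of those fixes comes first is web.dev’s LCP breakdown, which splits LCP into four sequential subparts: time to first byte, resource load delay, resource load duration, and element render delay. A sketch, assuming you already have the four durations in milliseconds (for example, from RUM attribution data); the key names are hypothetical.

```python
from typing import Dict, Tuple

# The four LCP subparts, in the order they occur during a page load:
#   ttfb          -> server response (TTFB, caching, CDN)
#   load_delay    -> hero resource discovered or requested late
#   load_duration -> hero resource too heavy or slow to download
#   render_delay  -> render-blocking CSS, fonts, or JS after the resource arrives
SUBPARTS = ("ttfb", "load_delay", "load_duration", "render_delay")


def lcp_breakdown(subparts_ms: Dict[str, float]) -> Tuple[float, str]:
    """Return (total LCP in ms, name of the largest subpart)."""
    missing = [name for name in SUBPARTS if name not in subparts_ms]
    if missing:
        raise ValueError(f"missing subparts: {missing}")
    total = sum(subparts_ms[name] for name in SUBPARTS)
    largest = max(SUBPARTS, key=lambda name: subparts_ms[name])
    return total, largest
```

If `load_delay` dominates across a template, the hero image is usually being discovered too late (for example, injected by script or lazy-loaded), which no amount of image compression will fix.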

INP: the interaction metric that exposes JavaScript bloat

INP is where modern stacks often fail: hydration costs, large bundles, and third-party tags can block the main thread. The fastest wins typically come from reducing JS shipped per route, deferring non-critical scripts, and breaking up long tasks. INP is also where “feature creep” becomes measurable—every widget and tag competes for responsiveness.
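Chrome treats any main-thread task longer than 50 ms as a long task. To see which scripts are worth splitting or deferring, you can list long tasks and sum each one’s time beyond 50 ms, the same per-task arithmetic behind the lab metric Total Blocking Time. This is a sketch, assuming (source, duration) pairs exported from a performance trace (the tuple layout is hypothetical); it is a lab proxy and will not equal field INP.

```python
from typing import Dict, List, Tuple

LONG_TASK_MS = 50  # tasks longer than this count as long tasks


def blocking_time_by_source(tasks: List[Tuple[str, float]]) -> Dict[str, float]:
    """Sum the portion of each long task beyond 50 ms, grouped by source.

    tasks: (source label, duration in ms), e.g. ("analytics.js", 180.0)
    """
    blocking: Dict[str, float] = {}
    for source, duration in tasks:
        if duration > LONG_TASK_MS:
            blocking[source] = blocking.get(source, 0.0) + duration - LONG_TASK_MS
    # Worst offenders first.
    return dict(sorted(blocking.items(), key=lambda item: item[1], reverse=True))
```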

CLS: layout stability as a trust and comprehension signal

CLS is not just aesthetics. When content jumps, users lose reading position, misclick, and trust the page less. For extraction systems, shifting DOM can complicate consistent parsing. The most common fixes are reserving space for media and ads, avoiding injecting banners above existing content, and using stable font loading strategies.
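It also helps to know how CLS is aggregated. Individual layout shifts (excluding those right after user input) are grouped into session windows: a window closes after a 1-second gap between shifts or once it spans 5 seconds, and CLS is the largest window’s total. A sketch, assuming (start time, value, had_recent_input) tuples such as those you could collect from `layout-shift` performance entries; the function name is illustrative.

```python
from typing import List, Optional, Tuple

GAP_MS = 1000         # a session window closes after a 1 s gap between shifts
MAX_WINDOW_MS = 5000  # ...or once it has lasted 5 s


def cumulative_layout_shift(shifts: List[Tuple[float, float, bool]]) -> float:
    """shifts: (start time ms, shift value, had_recent_input), sorted by time."""
    largest = 0.0
    window_total = 0.0
    window_start: Optional[float] = None
    last_time = 0.0
    for start, value, had_recent_input in shifts:
        if had_recent_input:
            continue  # shifts shortly after user input are excluded from CLS
        if (
            window_start is not None
            and start - last_time < GAP_MS
            and start - window_start < MAX_WINDOW_MS
        ):
            window_total += value  # extend the current session window
        else:
            window_start = start  # start a new session window
            window_total = value
        last_time = start
        largest = max(largest, window_total)
    return largest
```

Because only the worst window counts, one burst of late-loading ads can fail CLS even if the rest of the visit is stable.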

Why CWV supports Structured Data for LLMs: performance as a prerequisite for Knowledge Graph extraction

Rendering and hydration: when structured data is present but not reliably processed

Many teams “have schema” but still see inconsistent enhancement results because the JSON-LD is injected client-side, appears late, or varies across routes. CWV—especially INP—often correlates with how heavy the client-side runtime is. The more your page depends on hydration and delayed scripts, the more likely structured data and entity cues are delivered inconsistently to crawlers and retrieval systems.
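A quick way to check whether a template’s structured data is in the initial HTML response, rather than injected later by scripts, is to parse the raw, unrendered HTML and look for `application/ld+json` blocks. The sketch below uses only Python’s standard `html.parser`; fetching the page is left out, and the class and function names are hypothetical. If a type you expect appears only in rendered HTML, it depends on client-side scripts.

```python
import json
from html.parser import HTMLParser
from typing import Any, List, Optional, Tuple


class JsonLdCollector(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self._buffer: List[str] = []
        self.blocks: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        script_type = (dict(attrs).get("type") or "").strip().lower()
        if tag == "script" and script_type == "application/ld+json":
            self._inside = True
            self._buffer = []

    def handle_data(self, data: str) -> None:
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag: str) -> None:
        if tag == "script" and self._inside:
            self.blocks.append("".join(self._buffer))
            self._inside = False


def jsonld_types_in_raw_html(html: str) -> List[str]:
    """Return the @type values declared in JSON-LD blocks of unrendered HTML."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    found: List[str] = []
    for block in collector.blocks:
        try:
            data: Any = json.loads(block)
        except json.JSONDecodeError:
            found.append("<invalid JSON-LD>")
            continue
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                continue
            # An @graph array can hold several entities in one block.
            for node in item.get("@graph", [item]):
                if isinstance(node, dict) and "@type" in node:
                    node_type = node["@type"]
                    found.extend(node_type if isinstance(node_type, list) else [node_type])
    return found
```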

Entity clarity + fast delivery: improving AI Retrieval & Content Discovery outcomes

Knowledge Graph-ready content is content where entities (people, products, organizations, concepts) and their relationships are explicit in both markup and surrounding copy. Fast, stable delivery helps those signals be consistently available for parsing—whether by Google’s indexing pipeline or by answer engines that blend web sources with internal knowledge. This is closely related to how modern systems bridge web sources and internal data (see our briefing on Perplexity AI’s internal knowledge search).

Implications for AI Overviews and answer engines: fewer friction points, more consistent citations

If AI systems are deciding what to cite, they tend to reward sources that are consistently retrievable, readable, and structurally clear. Performance issues can cause partial renders, missing modules, or unstable content that reduces extraction confidence. This matters even more as user-generated content and non-traditional sources enter AI citation sets (see our related briefing on the rise of user-generated content in AI citations).

Whenever feasible, ensure critical JSON-LD for entity pages is present in the initial HTML response (SSR or reliable pre-render). Avoid late-injected schema that depends on heavy client-side scripts—those scripts are often the same ones hurting INP.
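If your server renders HTML in Python (or you pre-render at build time), the JSON-LD can be emitted as part of the template. One detail worth handling: a string value containing `</script>` would end the element early, so this sketch escapes `<`, `>`, and `&` as JSON unicode escapes, which JSON parsers decode back to the original characters. The function name is illustrative, not from any framework.

```python
import json
from typing import Any, Dict


def jsonld_script_tag(entity: Dict[str, Any]) -> str:
    """Render a JSON-LD object as a <script> tag for the initial HTML."""
    payload = json.dumps(entity, ensure_ascii=False, separators=(",", ":"))
    # Stop "</script>" or "<!--" inside values from breaking out of the tag.
    # These characters only occur inside JSON strings, where \uXXXX is valid.
    payload = (
        payload.replace("<", "\\u003c")
        .replace(">", "\\u003e")
        .replace("&", "\\u0026")
    )
    return f'<script type="application/ld+json">{payload}</script>'
```

Because the tag is built from the same data the page template uses, the markup and the visible entity context stay in sync on every route.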

Before/after plan: server-rendered JSON-LD + CWV improvements (measurement template)

Illustrative targets for a template migration. Track structured data detection via Search Console enhancements and validate with Rich Results Test. Replace with your measured deltas.

This “delivery reliability” theme also shows up in AI assistant experiences, where answer quality is constrained by how cleanly the assistant can retrieve and interpret content (see our related briefing, “Samsung’s Bixby Reborn: A Perplexity-Powered AI Assistant”).

What to do next: 2025 playbook and predictions for teams optimizing for rankings and LLM visibility

90-day action plan: prioritize templates, not one-off pages

Select the 2–4 templates that drive outcomes

Choose templates by traffic and business value (e.g., article, category, product, glossary/entity page). Template-level fixes move CWV at scale and stabilize structured data delivery across thousands of URLs.

Measure field performance, not just lab scores

Use CrUX and/or RUM to segment LCP/INP/CLS by device and template. Confirm whether “poor” pages share the same LCP element, long tasks, or third-party script patterns.
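If you collect your own RUM beacons, segmentation comes down to grouping samples by cohort and taking the 75th percentile, the same percentile Google uses for CWV. A sketch using the nearest-rank method, assuming (device, template, metric, value) tuples; CrUX derives its p75 from histogram data, so expect small differences.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Cohort = Tuple[str, str, str]  # (device, template, metric)


def p75(values: List[float]) -> float:
    """75th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("p75 of an empty list is undefined")
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def p75_by_cohort(samples: List[Tuple[str, str, str, float]]) -> Dict[Cohort, float]:
    """samples: (device, template, metric, value) from RUM beacons."""
    grouped: Dict[Cohort, List[float]] = defaultdict(list)
    for device, template, metric, value in samples:
        grouped[(device, template, metric)].append(value)
    return {cohort: p75(values) for cohort, values in grouped.items()}
```

Cohorts whose p75 lands in the “poor” range are the ones worth inspecting for a shared LCP element, long task, or third-party script.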

Fix the top bottleneck per metric (keep it surgical)

LCP: reduce TTFB + optimize hero media. INP: cut JS and third-party overhead. CLS: reserve space for dynamic modules. Avoid “performance theater” (micro-optimizations) before removing the biggest offenders.

Validate structured data delivery and entity coverage

Ensure JSON-LD is consistent across templates, present in initial HTML where possible, and supported by on-page entity context (definitions, attributes, relationships). Validate with Rich Results Test and monitor Search Console enhancements.
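For the consistency check, it can help to encode your own expectations per template and flag URLs whose JSON-LD drifts. The property map below is a hypothetical team policy, not Google’s list of required properties (which varies by rich result type); it assumes you have already parsed each URL’s JSON-LD into a list of entity dicts.

```python
from typing import Any, Dict, List, Set

# Hypothetical policy: properties YOUR team expects on each template.
EXPECTED: Dict[str, Dict[str, Set[str]]] = {
    "article": {"Article": {"headline", "author", "datePublished"}},
    "product": {"Product": {"name", "offers"}},
}


def audit_template(template: str, entities: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable problems for one URL's parsed JSON-LD entities."""
    by_type: Dict[str, Dict[str, Any]] = {}
    for entity in entities:
        types = entity.get("@type", [])
        for entity_type in types if isinstance(types, list) else [types]:
            by_type[entity_type] = entity
    problems: List[str] = []
    for expected_type, props in EXPECTED.get(template, {}).items():
        entity = by_type.get(expected_type)
        if entity is None:
            problems.append(f"missing {expected_type}")
            continue
        for prop in sorted(props - set(entity)):
            problems.append(f"{expected_type} lacks {prop}")
    return problems
```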

Monitor outcomes: rankings, crawl stats, and enhancement impressions

Track CWV trends alongside query groups and template cohorts. Watch crawl stats and index coverage for stability improvements. If you publish research-heavy content, also monitor how AI-driven discovery affects traffic patterns (see our related briefing on the impact of AI search engines on publisher traffic).

Expert quote opportunities: performance engineering + technical SEO perspectives

- Performance engineer quote slot: “INP is mostly a main-thread scheduling problem—reduce long tasks and third-party contention.”

- Technical SEO quote slot: “CWV rarely outranks relevance, but it breaks ties when intent match is equal—and it reduces indexing/rendering surprises.”

- Knowledge Graph/ontology quote slot: “Stable templates help keep entity markup and contextual cues consistent, which improves extraction quality and reduces ambiguity.”

Prediction: CWV becomes more “table stakes” as AI-driven discovery rewards reliability

As AI systems increasingly synthesize answers, the winners are often sources that are easy to retrieve, parse, and trust. That doesn’t mean “fastest page wins,” but it does mean unreliable rendering and unstable layouts become a bigger liability. Research on ranking and selection behaviors in LLM-mediated contexts also suggests that bias and selection dynamics can shape what gets surfaced—making consistent technical quality a defensible baseline (external: arXiv).

KPI dashboard concept: baseline vs 90-day targets (template-level)

Set targets per template and device. Secondary KPIs help connect CWV work to crawl/index stability and structured data visibility.
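One way to make the dashboard comparable across KPIs is to express each one as progress from baseline toward its 90-day target, so lower-is-better metrics (LCP, INP, CLS) and higher-is-better ones (for example, share of URLs with valid JSON-LD) read the same way. A sketch; the clamping to [0, 1] is a design choice, not a standard.

```python
def progress_to_target(baseline: float, current: float, target: float) -> float:
    """Fraction of the baseline-to-target gap closed so far, clamped to [0, 1].

    Works for lower-is-better metrics (target < baseline) and
    higher-is-better metrics (target > baseline) alike.
    """
    gap = target - baseline
    if gap == 0:
        return 1.0  # the target already equals the baseline
    fraction = (current - baseline) / gap
    return max(0.0, min(1.0, fraction))
```

A regression (current worse than baseline) shows as 0 rather than a negative number; track the raw values alongside if you need to see how far it slipped.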

Key Takeaways

In 2025, Core Web Vitals still influence SEO, but mostly as a tie-breaker and quality differentiator—not a replacement for relevance, authority, or entity coverage.

The metrics that matter are LCP (≤2.5s), INP (≤200ms), and CLS (≤0.1). INP is often the biggest blocker on modern JS-heavy sites.

For Knowledge Graph-ready content, CWV is an enabling layer: fast, stable rendering helps structured data and entity cues be delivered consistently for extraction and citation.

Prioritize template-level fixes, validate JSON-LD delivery (prefer SSR/pre-render), and monitor CWV alongside crawl/index stability and Search Console enhancement impressions.


Founder of Geol.ai

Senior builder at the intersection of AI, search, and blockchain. I design and ship agentic systems that automate complex business workflows. On the search side, I’m at the forefront of GEO/AEO (AI SEO), where retrieval, structured data, and entity authority map directly to AI answers and revenue. I’ve authored a whitepaper on this space and road-test ideas currently in production. On the infrastructure side, I integrate LLM pipelines (RAG, vector search, tool calling), data connectors (CRM/ERP/Ads), and observability so teams can trust automation at scale. In crypto, I implement alternative payment rails (on-chain + off-ramp orchestration, stable-value flows, compliance gating) to reduce fees and settlement times versus traditional processors and legacy financial institutions. A true Bitcoin treasury advocate. 18+ years of web dev, SEO, and PPC give me the full stack—from growth strategy to code. I’m hands-on (Vibe coding on Replit/Codex/Cursor) and pragmatic: ship fast, measure impact, iterate. Focus areas: AI workflow automation • GEO/AEO strategy • AI content/retrieval architecture • Data pipelines • On-chain payments • Product-led growth for AI systems Let’s talk if you want: to automate a revenue workflow, make your site/brand “answer-ready” for AI, or stand up crypto payments without breaking compliance or UX.
