Perplexity AI Image Upload: What Multimodal Search Changes for GEO, Citations, and Brand Visibility

How Perplexity’s image upload shifts multimodal retrieval, citations, and brand visibility—and what to change in GEO for Knowledge Graph alignment.

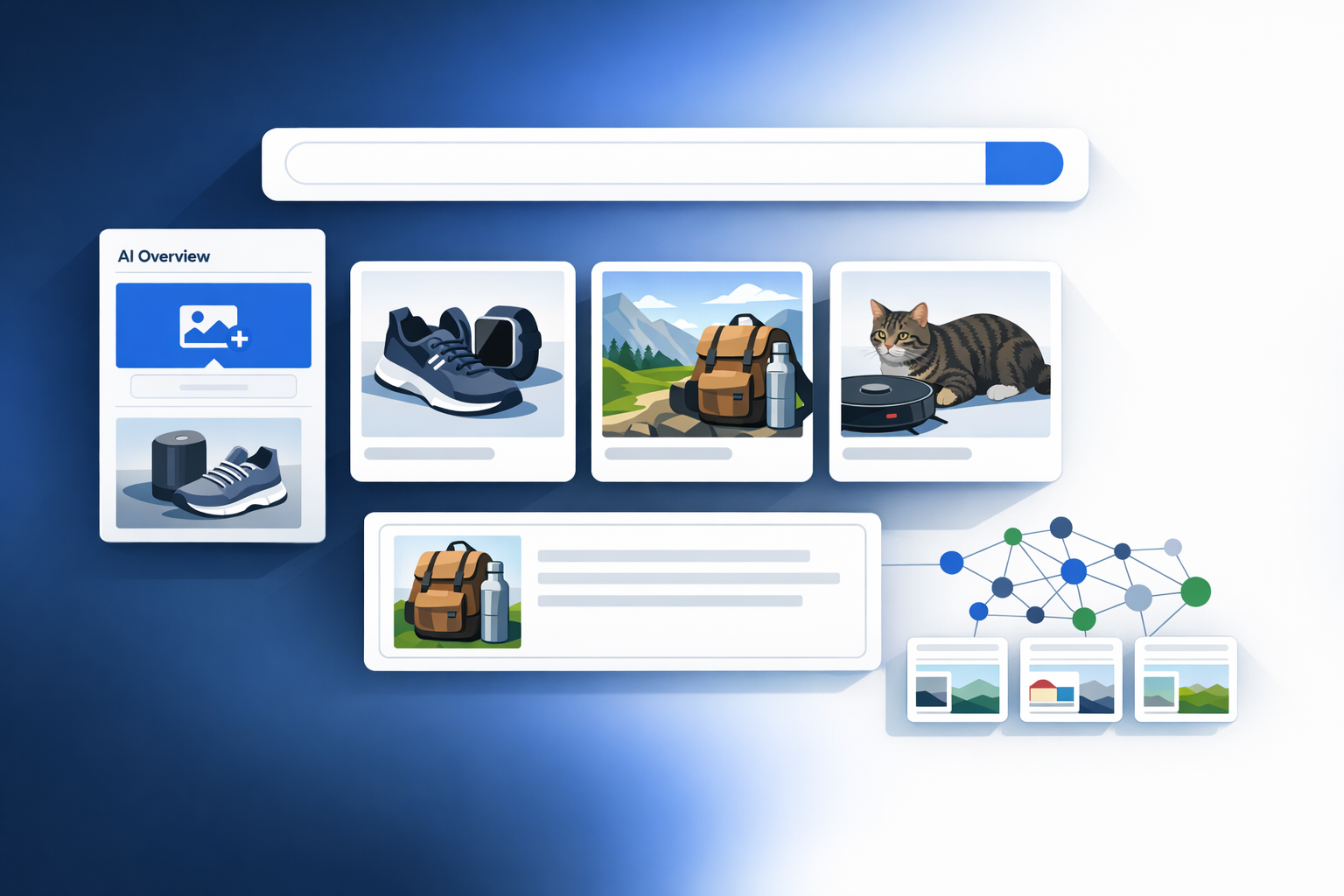

Perplexity’s image upload turns “search” into a multimodal prompt: the user’s photo or screenshot becomes the primary evidence, and the typed question becomes a constraint (“what is this?”, “is it compatible?”, “why is this error happening?”). For Generative Engine Optimization (GEO), that is an inflection point. Visibility is no longer won mainly by ranking for a keyword string; it is won by being the most unambiguous, citable source for the entities and attributes the model extracts from the image (logos, model numbers, UI labels, packaging, parts, locations). The practical result: citations and brand mentions shift toward pages that confirm what is visible, with clear entity identifiers, structured data, and quote-friendly formatting.

When a user uploads an image, the optimization target becomes: “Be the best grounded source for the entities detected in the image and the relationships the user is asking about.”

Executive summary: Why Perplexity image upload is a GEO inflection point

Featured snippet target: What changes when users search with images

In text-only search, the query typically encodes the “thing” (entity) and the “ask” (intent). With image upload, the “thing” often lives inside the image: a router label, a medicine box, a restaurant storefront, a UI error, a chart, or a product part. That flips the retrieval problem: Perplexity must first interpret the visual, then decide what to fetch to ground the answer—and citations tend to follow whichever pages most explicitly validate the extracted visual attributes.

- Visual evidence can dominate retrieval: objects, logos, packaging design, and OCR text (model numbers, ingredient lists, error codes).

- Citations skew toward pages that confirm the visible claim with minimal ambiguity: specs, compatibility statements, definitions, and troubleshooting steps.

- Brand visibility becomes more dependent on visual recognizability (logo/packaging/UI) plus a strong web “entity footprint” that matches what the model extracted.

The Knowledge Graph layer: From “keywords” to entity-grounded visual context

Multimodal search pushes answer engines to behave more like entity resolution systems: identify a candidate entity (brand/product/place), disambiguate it (which exact model/location/version), then retrieve sources that ground the answer. That’s why Knowledge Graph alignment matters: if your site provides canonical entity pages with stable identifiers and relationships, you reduce ambiguity and increase the odds Perplexity can confidently cite you. This is the same strategic shift discussed across AI-search rollouts—see how platforms are balancing retrieval, control, and user trust in Truth Social’s AI Search: Balancing Information and Control, and the broader GEO implications in The Complete Guide to AI-Powered SEO: Unlocking the Future of Search Engine Optimization.

Sample multimodal query mix (image + question): intent distribution

Illustrative dataset of 40 multimodal prompts, categorized by primary intent. Use a similar taxonomy to audit where your brand is most likely to be “seen” and cited.

Why this matters: if your highest-volume multimodal intents are “identify” and “troubleshoot,” then your most valuable pages are not necessarily blog posts—they’re canonical product/entity pages, support docs, and definition pages that can be cited verbatim.

How Perplexity’s multimodal retrieval likely works (and where citations come from)

Perplexity hasn’t published a full technical blueprint for image upload, but you can use a practical mental model consistent with modern multimodal RAG systems: vision extraction → entity linking → retrieval → grounded answer with citations. This same “retrieval-first” direction is visible across AI search integrations (e.g., Google’s AI Mode evolution) and competitive entrants; see Google’s announcement on Gemini integration for context: https://blog.google/products/search/gemini-3-search-ai-mode.

Multimodal pipeline: vision extraction → entity linking → retrieval → grounded answer

- Vision extraction: detect objects/logos, read text (OCR), infer scene context (e.g., “kitchen appliance,” “pharmacy shelf,” “macOS dialog”).

- Entity linking: map extracted signals to candidate entities (brand, product line, exact model, location, software feature). Strong identifiers (model numbers, SKUs, exact error codes) reduce ambiguity.

- Retrieval: fetch sources that confirm the visual attributes and answer the user’s question (specs, manuals, compatibility lists, official docs, reputable references).

- Grounded answer + citations: generate a response that quotes or paraphrases retrieved sources; citations cluster around pages that are explicit, structured, and easy to quote.
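The entity-linking step above can be made concrete with a small sketch. This is not Perplexity's implementation, which has not been published; it is a minimal illustration of why a full identifier resolves cleanly while a fragment leaves several candidates. The catalog, the `example.com` URLs, the `X200-EU` and `X210-NA` variants, and the model-number pattern are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntity:
    brand: str
    model: str  # exact model identifier, e.g. "X200-NA"
    url: str    # canonical entity page (hypothetical URL)


# Hypothetical catalog; in practice this would come from a product database.
CATALOG = [
    CatalogEntity("ACME", "X200-NA", "https://example.com/products/x200-na"),
    CatalogEntity("ACME", "X200-EU", "https://example.com/products/x200-eu"),
    CatalogEntity("ACME", "X210-NA", "https://example.com/products/x210-na"),
]

# Assumed identifier shape: 1-4 letters, 2-4 digits, optional "-XX" region suffix.
MODEL_TOKEN = re.compile(r"\b[A-Z]{1,4}\d{2,4}(?:-[A-Z]{2})?\b")


def link_entities(ocr_text, catalog=CATALOG):
    """Rank catalog entities by how well OCR tokens match their model id.

    Score 2 = exact identifier match, score 1 = the OCR token is only a
    prefix of the model id (ambiguous, several variants may qualify).
    """
    tokens = set(MODEL_TOKEN.findall(ocr_text.upper()))
    scored = []
    for entity in catalog:
        if entity.model in tokens:
            scored.append((2, entity))
        elif any(entity.model.startswith(token) for token in tokens):
            scored.append((1, entity))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored


if __name__ == "__main__":
    for score, entity in link_entities("ACME Model: X200-NA FW 2.1"):
        print(score, entity.model, entity.url)
```

With the full label text the sketch returns a single exact match. With only "X200" legible it returns both regional variants at the lower score, which is the ambiguity a "compare models" table is meant to resolve.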

Citation mechanics: why some sources get cited and others don’t

| What the model needs to confirm | Content types most likely to be cited | On-page traits that increase citation odds |
|---|---|---|
| Exact product identity (brand + model) | Manufacturer pages, manuals, authoritative databases | Model numbers in H1/H2, spec tables, clear product naming, Product schema |
| Meaning of a visible error code | Support documentation, knowledge base articles, reputable forums | Error code in a heading, step-by-step fix, screenshots with captions, HowTo schema |
| Compatibility (“does this fit/work with X?”) | Compatibility matrices, spec sheets, integration docs | Explicit “Compatible with” statements, tables, versioning, last-updated dates |

This also explains why AI search shifts publisher traffic patterns: answer engines may cite fewer sources overall and favor the ones that state the answer most clearly. For a data-driven look at how AI search changes referral dynamics, see The Impact of AI Search Engines on Publisher Traffic: A Data-Driven Comparison Review.

Failure modes: hallucinated visual claims, wrong entity resolution, and stale sources

- Hallucinated visual claims: the model infers something not present (e.g., assumes an ingredient, location, or feature). Mitigation: publish pages that explicitly enumerate what’s true/false (spec lists, “not compatible with” notes).

- Wrong entity resolution: similar packaging or near-identical model numbers cause misattribution. Mitigation: strengthen disambiguation (aliases, “compare models” tables, unique identifiers in prominent page elements).

- Stale sources: older pages get cited for current products or policies. Mitigation: visible “last updated” dates, versioned documentation, and canonical pages that supersede older URLs.

If multiple entities plausibly match the image, Perplexity will often cite whichever source provides the clearest identity proof (model numbers, tables, structured data) even if it’s not the “best” brand outcome for you.

What changes for GEO: optimizing for visual entity recognition and Knowledge Graph alignment

To win citations in image-based queries, think like an entity librarian: your job is to make the “thing in the image” easy to identify, easy to verify, and easy to quote. This aligns with broader AI-search shifts, including browser-level AI integrations; see Apple's Safari to Integrate AI Search Engines: A Strategic Shift in Browsing, where discovery increasingly happens inside AI interfaces rather than classic SERPs.

Entity-first content: make the “thing in the image” unambiguous

- Build canonical entity pages for products, locations, people, and core concepts. Include: official name, aliases, model/SKU/part numbers, release/version, and “how to identify” cues (what’s printed on the label, where the serial number appears).

- Add disambiguation modules: “Not to be confused with…”, “Similar models”, “Regional variants”, and a comparison table that differentiates near-matches.

- Publish cite-ready claims near the top of the page: one sentence that states the key attribute you want cited (e.g., “Model X supports Y protocol on firmware 2.1+”).

Structured Data that supports multimodal grounding (Schema.org + linked entities)

Structured data doesn’t “force” citations, but it can reduce entity resolution errors, especially when the image yields only partial identifiers (a logo plus a fragment of a model number). Prioritize Schema.org types that encode identity and relationships:

- Product: brand, model, sku/mpn, gtin (where applicable), offers, and additionalProperty for key attributes.

- Organization/LocalBusiness: official name, logo, address, contact points, and sameAs links to authoritative profiles.

- HowTo + FAQPage: step-by-step troubleshooting and common questions that map to screenshot-based queries.

- Article: authorship, dates, and about/mentions fields to reinforce entity associations.
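As an illustration of the Product markup listed above, here is a minimal sketch that builds a JSON-LD object with the Schema.org properties `brand`, `model`, `sku`, `mpn`, `gtin13`, and `additionalProperty`. The helper name `product_jsonld` and all sample values (ACME, X200-NA, the SKU and MPN strings) are placeholders, not real product data.

```python
import json


def product_jsonld(name, brand, model, sku, mpn, properties, gtin13=None):
    """Return a Schema.org Product description serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "model": model,
        "sku": sku,
        "mpn": mpn,
        # Key attributes users are likely to ask about from a photo.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in properties.items()
        ],
    }
    if gtin13:  # only emit a GTIN when one actually exists
        data["gtin13"] = gtin13
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    markup = product_jsonld(
        name="ACME X200 Router",
        brand="ACME",
        model="X200-NA",
        sku="ACME-X200-NA",     # placeholder value
        mpn="X200-NA-001",      # placeholder value
        properties={"Firmware": "2.1"},
    )
    # Embed the result in the page inside <script type="application/ld+json">.
    print(markup)
```

Keeping the identifiers in the markup identical to the ones printed on the physical label is the point of the exercise: the same string should appear on the device, in the visible page text, and in the structured data.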

If you’re building internal monitoring and tooling around AI visibility, interoperability patterns like Anthropic’s Model Context Protocol (MCP) Gains Industry Adoption: What It Means for AI Visibility Monitoring are relevant because multimodal GEO quickly becomes a measurement and governance problem, not just a content problem.

Image SEO meets GEO: alt text, filenames, captions, and on-page entity context

Describe what the image proves (caption)

Write a caption that states the claim you want cited. Example: “The ACME X200 label shows firmware version 2.1 and model number X200-NA.”

Make alt text entity-specific (not generic)

Alt text should include brand + model + distinguishing attribute: “ACME X200 router rear label with model X200-NA and serial number location.”

Surround the image with disambiguating text

Place a short paragraph near the image that repeats the identifier(s) and links to the canonical entity page. This helps retrieval engines connect the visual to a stable entity record.

Add a table for attributes the model will be asked about

If users upload images to ask “is this compatible?”, publish a compatibility table. If they upload errors, publish an error-code table.
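The alt-text guidance in this section can be checked mechanically during a content audit. The sketch below is one possible lint, not an established standard: the function name `alt_text_issues` and the `GENERIC_WORDS` list are assumptions chosen for illustration.

```python
# Assumed list of words that carry no entity information on their own.
GENERIC_WORDS = {"image", "photo", "picture", "product", "screenshot"}


def alt_text_issues(alt: str, brand: str, model: str) -> list[str]:
    """Return a list of problems with an image's alt text (empty if none)."""
    issues = []
    lowered = alt.lower()
    if brand.lower() not in lowered:
        issues.append("missing brand")
    if model.lower() not in lowered:
        issues.append("missing model")
    words = [word.strip(".,") for word in lowered.split()]
    if words and all(word in GENERIC_WORDS for word in words):
        issues.append("generic wording only")
    return issues


if __name__ == "__main__":
    good = "ACME X200 router rear label with model X200-NA and serial number location"
    print(alt_text_issues(good, "ACME", "X200-NA"))
    print(alt_text_issues("product image", "ACME", "X200-NA"))
```

Running a check like this across every image on a priority entity page gives a quick list of images that cannot help connect a user's photo to the canonical entity record.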

Before/after multimodal GEO test: citation frequency (example)

Illustrative trend for 10 entity pages after adding structured data + improved captions/alt text. Track weekly citations from repeated multimodal prompts.

Brand visibility and citation share: measuring the impact of multimodal search

New visibility surfaces: logo recognition, packaging shots, screenshots, and documents

Multimodal discovery expands the “surface area” of brand search. Users don’t just type your name—they upload a photo of your packaging, a screenshot of your UI, a PDF excerpt, or an error dialog. If your web presence doesn’t clearly map those visuals to canonical entity pages, Perplexity may cite a third party that explains the same thing more explicitly. This is also why governance matters as autonomous tools proliferate; see Claude Cowork: What an Autonomous ‘Digital Coworker’ Means for Enterprise AI Governance, Security, and Trust, because brand assets and entity data become inputs to many agents—not just search.

Citation share KPIs for GEO: what to track beyond rankings

- Citation share by entity: for each priority entity (product/model/location), what % of citations go to your domain vs competitors?

- Brand mention rate: how often your brand name appears in the answer when it should.

- Correct attribution rate: brand + exact model + correct claim (e.g., compatibility) in one answer.

- Referral traffic from cited links: sessions from Perplexity (and other AI surfaces) to cited pages.
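Two of the KPIs above, citation share by entity and correct attribution rate, reduce to simple ratios once benchmark results are logged. The following is a minimal sketch under assumed data: the `BenchmarkRun` record layout and the `.example` domains are hypothetical, and the values would in practice be recorded by hand or by whatever tooling you use to review answers.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    entity: str            # priority entity the image+question prompt targets
    cited_domains: list    # domains cited in the answer
    brand_mentioned: bool  # brand name appears in the answer
    model_correct: bool    # exact model identified correctly
    claim_correct: bool    # the claim (e.g. compatibility) is stated correctly


def citation_share(runs, own_domain):
    """Per entity: fraction of all citations that point at own_domain."""
    totals, own = Counter(), Counter()
    for run in runs:
        totals[run.entity] += len(run.cited_domains)
        own[run.entity] += sum(1 for d in run.cited_domains if d == own_domain)
    return {entity: own[entity] / totals[entity] for entity in totals if totals[entity]}


def correct_attribution_rate(runs):
    """Fraction of answers with brand, exact model, and claim all correct."""
    if not runs:
        return 0.0
    correct = sum(
        1 for r in runs if r.brand_mentioned and r.model_correct and r.claim_correct
    )
    return correct / len(runs)


if __name__ == "__main__":
    runs = [
        BenchmarkRun("X200", ["acme.example", "reseller.example"], True, True, True),
        BenchmarkRun("X200", ["reseller.example", "forum.example"], True, False, True),
        BenchmarkRun("X210", ["acme.example"], True, True, True),
    ]
    print(citation_share(runs, "acme.example"))
    print(correct_attribution_rate(runs))
```

Tracking the two numbers separately matters: a domain can hold a high citation share while answers still name the wrong model, and the reverse.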

Citation share dashboard concept (top 5 entities, weekly)

Illustrative distribution of citations across domains for five entities. Use this to detect where competitors win citations from your branded visuals.

Competitive analysis: when competitors win citations from your own branded imagery

A common multimodal failure for brands is “citation leakage”: users upload a photo of your product, but Perplexity cites a reseller, a comparison site, or a forum because they have clearer compatibility tables or more explicit troubleshooting steps. Your counter-move is to publish the definitive, quote-friendly page that resolves the user’s question faster than any third party.

Owning the citation vs letting the ecosystem explain you

Benefits of owning the citation:

- Higher correct attribution (brand + model + claim)

- Lower risk of stale or incorrect guidance circulating

- More defensible entity authority across answer engines

Costs:

- Requires ongoing documentation hygiene and versioning

- Needs cross-team governance (SEO + product + support + brand)

- May require publishing “unflattering” edge cases (errors, incompatibilities)

Expert perspectives + implementation checklist (focused on multimodal readiness)

Expert quote opportunities: vision + retrieval, structured data, and Knowledge Graph strategy

“In multimodal retrieval, ambiguity compounds: if the image yields two plausible model matches, the system will trust the page that provides machine-readable identifiers and a human-readable proof statement in the same place.”

If you want to operationalize this, treat multimodal GEO as a repeatable evaluation workflow (not a one-time optimization). The same approach used in other high-stakes retrieval tasks—like patent prior art search—applies: define prompts, run benchmarks, measure citation patterns, iterate. For a parallel in retrieval rigor, see Perplexity's AI Patent Search Tool: How to Run Faster, More Defensible Prior Art Searches.

90-day action plan: highest-leverage changes for multimodal GEO

Weeks 1–2: map your top visual query scenarios

Collect 20–50 real scenarios: product photos, packaging, storefront shots, UI screenshots, error dialogs, and documents. For each, write the natural question a user asks. Categorize by intent and entity type.

Weeks 3–6: upgrade canonical entity pages

Ensure each priority entity page has: exact naming, identifiers (model/SKU/part), a short cite-ready claim, a spec/compatibility table, and a “how to identify” section with labeled images.

Weeks 7–9: implement structured data + sameAs governance

Add Product/Organization/HowTo/FAQ markup where relevant. Standardize sameAs links across the site and ensure brand/logo assets are consistent and up to date.

Weeks 10–12: run a repeated multimodal benchmark and track citation share

Re-run the same image+question prompts weekly in Perplexity. Record: citations, whether your domain is cited, entity naming accuracy, and whether the answer includes your preferred claim language.

Multimodal readiness rubric (0–2 scale across 8 factors)

Use this rubric to score priority entity pages and track improvements over time.
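A rubric of this shape is straightforward to score in code. The sketch below assumes eight factor names drawn from the recommendations in this article (naming, identifiers, cite-ready claim, spec or compatibility table, labeled identification images, structured data, sameAs links, last-updated date); the names are illustrative stand-ins, not the rubric's published factors.

```python
# Assumed factor names, derived from the action plan; substitute your own.
FACTORS = (
    "exact_naming",
    "identifiers",
    "cite_ready_claim",
    "spec_or_compat_table",
    "how_to_identify_images",
    "structured_data",
    "sameas_links",
    "last_updated_date",
)


def score_page(scores: dict) -> dict:
    """Validate 0-2 scores for every factor and summarize the page."""
    missing = [factor for factor in FACTORS if factor not in scores]
    if missing:
        raise ValueError(f"missing factors: {missing}")
    for factor in FACTORS:
        if scores[factor] not in (0, 1, 2):
            raise ValueError(f"{factor} must be 0, 1, or 2")
    return {
        "total": sum(scores[factor] for factor in FACTORS),
        "max": 2 * len(FACTORS),
        # Factors scored 0 are the first candidates for remediation.
        "weakest": [factor for factor in FACTORS if scores[factor] == 0],
    }


if __name__ == "__main__":
    page = dict.fromkeys(FACTORS, 2)
    page["structured_data"] = 0
    page["sameas_links"] = 1
    print(score_page(page))
```

Scoring the same pages before and after each phase of the 90-day plan gives a simple trend line to set beside the citation benchmark results.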

Key takeaways

Image upload shifts GEO from keyword targeting to entity-and-attribute grounding: be the clearest source for what’s visible (logo, model number, UI text) and what it implies.

Citations favor pages that explicitly confirm extracted attributes and are easy to quote (clear headings, concise claims, tables, and up-to-date docs).

Structured data and Knowledge Graph alignment reduce misidentification risk and improve correct attribution—especially when images are ambiguous.

Measure success with “citation share” and attribution accuracy (brand + model + claim), not just rankings; multimodal visibility is a monitoring discipline.

FAQ: Perplexity image upload and multimodal GEO

Further reading on how AI discovery pipelines are evolving—and why grounding and entity relationships are central—can be found in our briefings on AI-powered SEO and AI search platform shifts, including The Complete Guide to AI-Powered SEO: Unlocking the Future of Search Engine Optimization and our analysis of AI search behavior changes across engines in The Impact of AI Search Engines on Publisher Traffic: A Data-Driven Comparison Review.

Founder of Geol.ai

Senior builder at the intersection of AI, search, and blockchain. I design and ship agentic systems that automate complex business workflows. On the search side, I’m at the forefront of GEO/AEO (AI SEO), where retrieval, structured data, and entity authority map directly to AI answers and revenue. I’ve authored a whitepaper on this space and road-test ideas currently in production. On the infrastructure side, I integrate LLM pipelines (RAG, vector search, tool calling), data connectors (CRM/ERP/Ads), and observability so teams can trust automation at scale. In crypto, I implement alternative payment rails (on-chain + off-ramp orchestration, stable-value flows, compliance gating) to reduce fees and settlement times versus traditional processors and legacy financial institutions. A true Bitcoin treasury advocate. 18+ years of web dev, SEO, and PPC give me the full stack—from growth strategy to code. I’m hands-on (Vibe coding on Replit/Codex/Cursor) and pragmatic: ship fast, measure impact, iterate. Focus areas: AI workflow automation • GEO/AEO strategy • AI content/retrieval architecture • Data pipelines • On-chain payments • Product-led growth for AI systems Let’s talk if you want: to automate a revenue workflow, make your site/brand “answer-ready” for AI, or stand up crypto payments without breaking compliance or UX.
